This past weekend I put together an example of a Spring Roo-based

Jersey application that can run on Google App Engine.

All code in this post is derived from the example project at

https://github.com/marcisme/jersey-on-gae-example.

You should be able to get a project up and running by copying and pasting

the snippets in this entry, or you can clone the project and follow

along. This example uses Spring Roo 1.1.1, Jersey 1.5 and App

Engine SDK 1.4.0. A basic understanding of the technologies involved is

assumed.

Project

The first step is to create your project. Create a directory for the

project, fire up the Roo shell and then enter the commands below. This

will both create a new project and configure it to use App Engine for

persistence. Make sure to substitute an actual app id for your-appid

if you plan to deploy this example.

project --topLevelPackage com.example.todo

persistence setup --provider DATANUCLEUS --database GOOGLE_APP_ENGINE --applicationId your-appid

Dependencies

Once you’ve set up your project for GAE, you’ll need to add the

dependencies for Jersey and JAXB. If adding them manually, you can refer

to the dependencies section

of the Jersey documentation. In either case, you’ll need to add the

Jersey repository to your pom.xml.

<repository>

<id>maven2-repository.dev.java.net</id>

<name>Java.net Repository for Maven</name>

<url>http://download.java.net/maven/2/</url>

<layout>default</layout>

</repository>

Jersey

Once you’ve added the Jersey repository, you can use these commands to

add the dependencies to your project.

dependency add --groupId com.sun.jersey --artifactId jersey-server --version 1.5

dependency add --groupId com.sun.jersey --artifactId jersey-json --version 1.5

dependency add --groupId com.sun.jersey --artifactId jersey-client --version 1.5

dependency add --groupId com.sun.jersey.contribs --artifactId jersey-spring --version 1.5

Unfortunately the jersey-spring artifact depends on Spring 2.5.x.

Because Roo is based on Spring 3.0.x, you need to add some exclusions to

prevent pulling in incompatible versions of Spring artifacts.

<dependency>

<groupId>com.sun.jersey.contribs</groupId>

<artifactId>jersey-spring</artifactId>

<version>1.5</version>

<exclusions>

<exclusion>

<groupId>org.springframework</groupId>

<artifactId>spring</artifactId>

</exclusion>

<exclusion>

<groupId>org.springframework</groupId>

<artifactId>spring-core</artifactId>

</exclusion>

<exclusion>

<groupId>org.springframework</groupId>

<artifactId>spring-web</artifactId>

</exclusion>

<exclusion>

<groupId>org.springframework</groupId>

<artifactId>spring-beans</artifactId>

</exclusion>

<exclusion>

<groupId>org.springframework</groupId>

<artifactId>spring-context</artifactId>

</exclusion>

</exclusions>

</dependency>

JAXB

App Engine has

issues

with some versions of JAXB. I found 2.1.12 to work, while 2.1.13 and

2.2.x versions did not. This will hopefully change in the future. You

can add a dependency on JAXB with the following command.

dependency add --groupId com.sun.xml.bind --artifactId jaxb-impl --version 2.1.12

Spring

If you’re adding Jersey to a project that uses Roo’s web tier, you’ll

already have the spring-web dependency in your project. If not, you’ll

need to add that too.

dependency add --groupId org.springframework --artifactId spring-web --version ${spring.version}

You can specify an explicit version, but if you created your project

with Roo, the spring.version build property should be set. Either way

you’ll want to exclude commons-logging.

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-web</artifactId>

<version>${spring.version}</version>

<exclusions>

<exclusion>

<groupId>commons-logging</groupId>

<artifactId>commons-logging</artifactId>

</exclusion>

</exclusions>

</dependency>

Maven GAE Plugin

For some reason Roo uses a rather old version of the Maven GAE Plugin.

The latest version at the time of this writing is 0.8.1. In addition

to what Roo will have created for you, you’ll want to bind the start

and stop goals if you plan on running integration tests. See the

pom.xml in the example project

for an example of how to do that.

<plugin>

<groupId>net.kindleit</groupId>

<artifactId>maven-gae-plugin</artifactId>

<version>0.8.1</version>

<configuration>

<unpackVersion>${gae.version}</unpackVersion>

</configuration>

<executions>

<execution>

<phase>validate</phase>

<goals>

<goal>unpack</goal>

</goals>

</execution>

</executions>

</plugin>

Entity

Next you’ll want to create an entity, which you can do with the commands

below.

enum type --class ~.Status

enum constant --name CREATED

enum constant --name DONE

entity --class ~.Todo --testAutomatically

field string --fieldName description

field enum --fieldName status --type ~.Status

In order to leverage Jersey’s JAXB serialization features, you’ll need

to annotate your entity with @XmlRootElement. Set a default value for

status while you’re here.

import javax.xml.bind.annotation.XmlRootElement;

@RooJavaBean

@RooToString

@RooEntity

@XmlRootElement

public class Todo {

@Enumerated

private Status status = Status.CREATED;

...
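To see what the field initializer does on its own, here is a minimal plain-Java sketch with the Roo and JPA annotations stripped away. TodoSketch and its nested Status enum are hypothetical stand-ins for the real Todo and Status types, not code from the example project.

```java
/** Hypothetical stand-in for the Roo-managed Todo entity (no Roo or JPA annotations). */
final class TodoSketch {

    /** Mirrors the two constants created with the Roo enum commands above. */
    enum Status { CREATED, DONE }

    private String description;

    // The initializer runs for every new instance, so a Todo that never has
    // its status set explicitly starts out as CREATED.
    private Status status = Status.CREATED;

    String getDescription() {
        return description;
    }

    void setDescription(String description) {
        this.description = description;
    }

    Status getStatus() {
        return status;
    }

    void setStatus(Status status) {
        this.status = status;
    }
}
```

A freshly constructed TodoSketch reports CREATED until setStatus is called, which is the behavior the default on the real entity is meant to provide.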

Resource

Here’s a very simple resource for the entity we created earlier. In

addition to the JAX-RS annotations, you also need to annotate the class

with @Service, which makes the class eligible for dependency injection

and other Spring services. This resource will support both XML and JSON.

package com.example.todo;

import org.springframework.stereotype.Service;

import javax.ws.rs.*;

import java.util.List;

@Service

@Consumes({"application/xml", "application/json"})

@Produces({"application/xml", "application/json"})

@Path("todo")

public class TodoResource {

@GET

public List<Todo> list() {

return Todo.findAllTodoes();

}

@GET

@Path("{id}")

public Todo show(@PathParam("id") Long id) {

return Todo.findTodo(id);

}

@POST

public Todo create(Todo todo) {

todo.persist();

return todo;

}

@PUT

public Todo update(Todo todo) {

return todo.merge();

}

@DELETE

@Path("{id}")

public void delete(@PathParam("id") Long id) {

Todo.findTodo(id).remove();

}

}
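The class-level @Path("todo") and the method-level @Path("{id}") combine to form the final URLs. As a rough summary of the routes the resource above exposes, here is a small sketch. The TodoRoute enum is purely illustrative and assumes the servlet is mapped at the root of the application; Jersey derives the real routing from the annotations, not from anything like this.

```java
/** Hypothetical route summary for TodoResource; not part of Jersey or the example project. */
enum TodoRoute {
    LIST("GET", "/todo"),
    SHOW("GET", "/todo/{id}"),
    CREATE("POST", "/todo"),
    UPDATE("PUT", "/todo"),
    DELETE("DELETE", "/todo/{id}");

    private final String method;
    private final String template;

    TodoRoute(String method, String template) {
        this.method = method;
        this.template = template;
    }

    /** The HTTP method the resource method is annotated with. */
    String method() {
        return method;
    }

    /** Fills in the {id} placeholder; routes without one are returned unchanged. */
    String path(long id) {
        return template.replace("{id}", Long.toString(id));
    }
}
```

For example, TodoRoute.SHOW.path(1) yields /todo/1, while update sends the id in the request body rather than in the path.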

Final Configuration

At a minimum, you’ll need Spring’s OpenEntityManagerInViewFilter and

Jersey’s SpringServlet set up in your web.xml. As with the

spring-web module dependency, you will have to create a web.xml in

src/main/webapp/WEB-INF if you don’t already have one.

<web-app>

<!-- Creates the Spring Container shared by all Servlets and Filters -->

<listener>

<listener-class>org.springframework.web.context.ContextLoaderListener</listener-class>

</listener>

<context-param>

<param-name>contextConfigLocation</param-name>

<param-value>classpath*:META-INF/spring/applicationContext*.xml</param-value>

</context-param>

<!-- Keeps a JPA EntityManager open for the whole request to avoid lazy init issues -->

<filter>

<filter-name>Spring OpenEntityManagerInViewFilter</filter-name>

<filter-class>org.springframework.orm.jpa.support.OpenEntityManagerInViewFilter</filter-class>

</filter>

<filter-mapping>

<filter-name>Spring OpenEntityManagerInViewFilter</filter-name>

<url-pattern>/*</url-pattern>

</filter-mapping>

<!-- Handles Jersey requests -->

<servlet>

<servlet-name>Jersey Spring Web Application</servlet-name>

<servlet-class>com.sun.jersey.spi.spring.container.servlet.SpringServlet</servlet-class>

</servlet>

<servlet-mapping>

<servlet-name>Jersey Spring Web Application</servlet-name>

<url-pattern>/*</url-pattern>

</servlet-mapping>

</web-app>

You may also need to change the project’s packaging type to war.

<groupId>com.example.todo</groupId>

<artifactId>todo</artifactId>

<packaging>war</packaging>

<version>0.1.0.BUILD-SNAPSHOT</version>

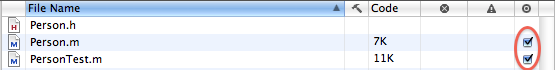

Testing

You can now compile and run the application locally with mvn clean

gae:run.

> mvn clean gae:run

...

INFO: The server is running at http://localhost:8080/

The following curl commands can be used to interact with the running

application.

# list

curl -i -HAccept:application/json http://localhost:8080/todo

# show

curl -i -HAccept:application/json http://localhost:8080/todo/1

# create

curl -i -HAccept:application/json -HContent-Type:application/json \

http://localhost:8080/todo -d '{"description":"walk the dog"}'

# update

curl -i -HAccept:application/json -HContent-Type:application/json \

http://localhost:8080/todo \

-d '{"description":"walk the dog","id":"1","status":"DONE","version":"1"}' \

-X PUT

# delete

curl -i http://localhost:8080/todo/1 -X DELETE

Once you’re happy with your application, you can upload it to App

Engine. If you download the example project

I created, you can build locally with mvn clean install -Dtodo-appid=<your-appid>.

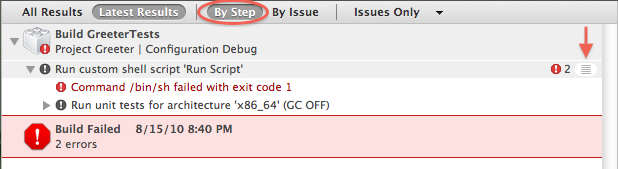

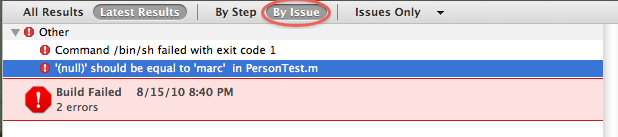

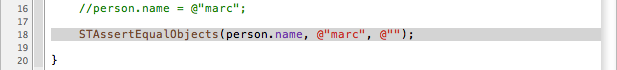

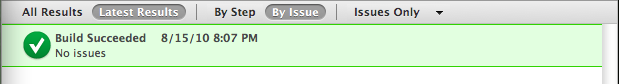

The local App Engine server will be used to run some basic integration

tests during the build. After the app is built, you can deploy it with

mvn gae:deploy. You will be prompted for your login information if you

have not set up your credentials in your settings.xml.

Once deployed, you can run the included integration test against the

live server with mvn failsafe:integration-test -Dgae.host=<your-appid>.appspot.com -Dgae.port=80.

There is also a simple client you can use to write your own tests or

experiment with.
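If you would rather experiment without the project's client, a standard-library-only sketch might look like the following. TodoClientSketch is hypothetical and is not the client shipped with the example project. It reuses the gae.host and gae.port property names from the command above, and the localhost:8080 defaults are an assumption based on the local development server address shown earlier.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.HttpURLConnection;
import java.net.URI;
import java.nio.charset.StandardCharsets;

/** Hypothetical stdlib-only client for the todo resource. */
final class TodoClientSketch {

    private final String baseUrl;

    TodoClientSketch(String host, int port) {
        // Port 80 is implied by http://, so it is left out of the URL.
        this.baseUrl = "http://" + host + (port == 80 ? "" : ":" + port);
    }

    /** Reads gae.host and gae.port, falling back to the local development server. */
    static TodoClientSketch fromSystemProperties() {
        String host = System.getProperty("gae.host", "localhost");
        int port = Integer.parseInt(System.getProperty("gae.port", "8080"));
        return new TodoClientSketch(host, port);
    }

    String todoUrl() {
        return baseUrl + "/todo";
    }

    String todoUrl(long id) {
        return todoUrl() + "/" + id;
    }

    /** Issues a GET with Accept: application/json and returns the response body. */
    String get(String url) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) URI.create(url).toURL().openConnection();
        conn.setRequestMethod("GET");
        conn.setRequestProperty("Accept", "application/json");
        try (BufferedReader in = new BufferedReader(
                new InputStreamReader(conn.getInputStream(), StandardCharsets.UTF_8))) {
            StringBuilder body = new StringBuilder();
            String line;
            while ((line = in.readLine()) != null) {
                body.append(line).append('\n');
            }
            return body.toString();
        } finally {
            conn.disconnect();
        }
    }
}
```

With the server running, `TodoClientSketch.fromSystemProperties()` followed by `client.get(client.todoUrl())` should be roughly equivalent to the list curl command; passing -Dgae.host and -Dgae.port points the same code at the deployed application.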

Having some level of integration tests for any GAE apps you write is

very important, as there are things that do not work consistently

between the local development server and the real App Engine servers. Be

aware that you can access the local development server console at

http://localhost:8080/_ah/admin,

which will let you browse the datastore amongst a few other things. Once

deployed to the real App Engine, your best source of information is

the application log. Be sure you’re looking at the correct version;

there’s a drop-down menu in the upper left area of the screen that will

let you choose the version of the logs you want to examine.
